Are incentivized Amazon reviews really unbiased?

Amazon’s Review Dataset consists of metadata and 142.8 million product reviews from May 1996 to July 2014. It contains ratings, review text, helpfulness votes, and product metadata such as descriptions, category information, and price. This wealth of information can tell us a lot about consumers and how they rate and review products.

In particular, the goal of this project was to observe a specific population of consumers—those who receive products for free or at discounted prices in exchange for “honest” reviews.

We asked: Are incentivized Amazon reviews really unbiased?

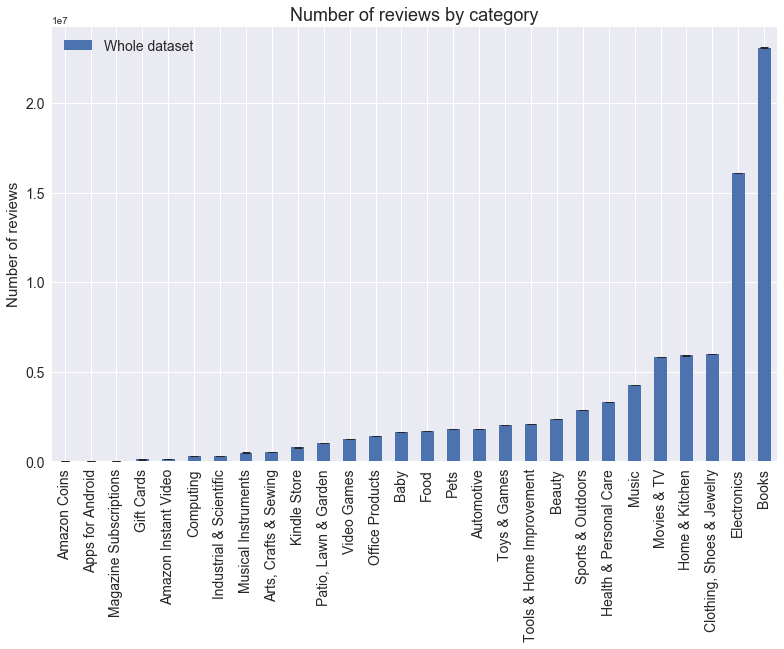

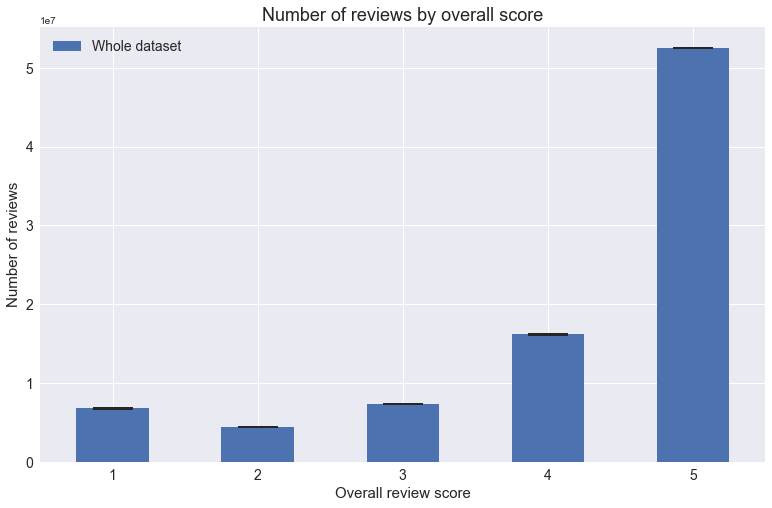

First, we explored the dataset to look for trends and get a sense of its overall characteristics. How many reviews are there per category? How many reviews gave 5 stars? 4 stars? How long are the reviews, and how positive are they?

We observe that the Electronics and Books categories have the most reviews and that most reviews give a 5-star rating.
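
A minimal sketch of these first counts, assuming each review has been loaded as a dict with the dataset's `overall` star rating and a `category` field attached during preprocessing (for example, from the per-category file it came from). `summarize` is a hypothetical helper, and the records are toy values rather than real data.

```python
from collections import Counter

def summarize(reviews):
    """Count reviews per category and per star rating.

    Assumes each review is a dict with a "category" key (attached during
    preprocessing, hypothetical) and an "overall" star rating from 1 to 5.
    """
    per_category = Counter(r["category"] for r in reviews)
    per_star = Counter(int(r["overall"]) for r in reviews)
    return per_category, per_star

# Toy records for illustration only; not real data.
reviews = [
    {"category": "Books", "overall": 5.0},
    {"category": "Electronics", "overall": 4.0},
    {"category": "Electronics", "overall": 5.0},
    {"category": "Books", "overall": 1.0},
    {"category": "Electronics", "overall": 5.0},
]

per_category, per_star = summarize(reviews)
print(per_category.most_common())  # [('Electronics', 3), ('Books', 2)]
print(sorted(per_star.items()))    # [(1, 1), (4, 1), (5, 3)]
```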

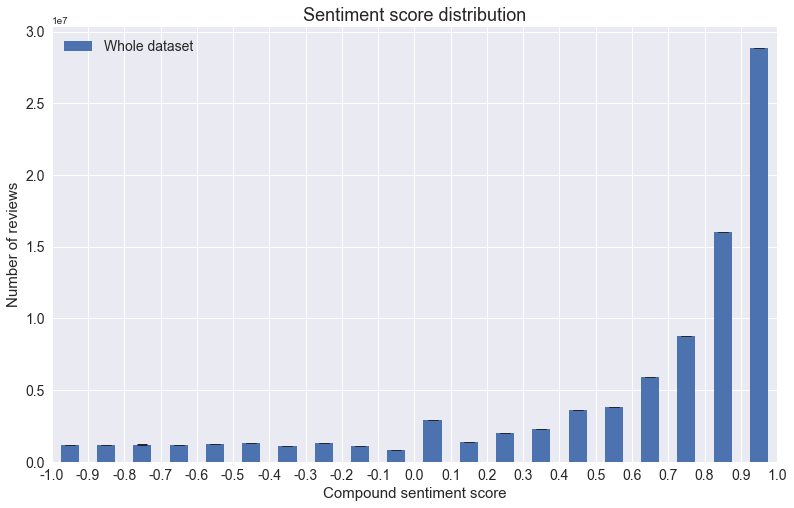

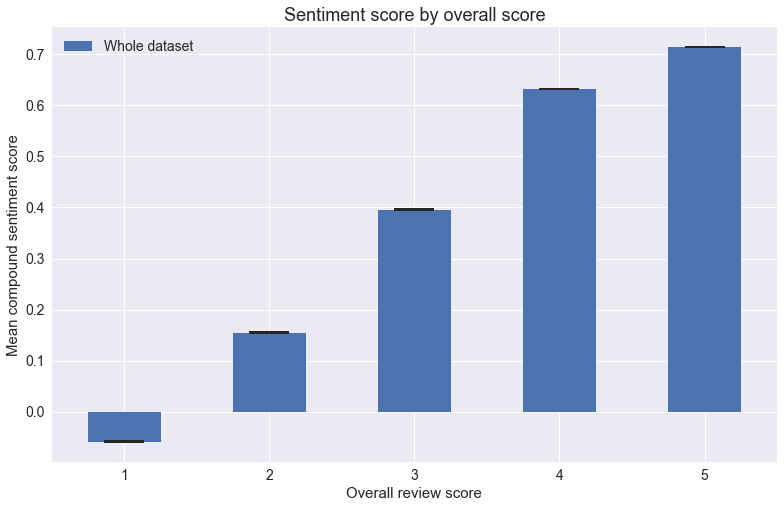

We didn’t want to rely on star ratings alone as a measure of product quality or consumer satisfaction. How positively or negatively people write their reviews is also a good indication of what they think about the product, so we used the VADER sentiment analysis tool to measure the sentiment of the review text.

A compound sentiment score above 0.5 indicates a positive sentiment, and a score below -0.5 indicates a negative sentiment. Scores in between are considered neutral.
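
A minimal sketch of the scoring step, assuming the third-party `vaderSentiment` package, whose `SentimentIntensityAnalyzer.polarity_scores` method returns a dict with a `compound` key in [-1, 1]. The cutoffs are kept as named constants because the ±0.5 band used here is wider than the ±0.05 thresholds the VADER README describes as typical; `compound_score`, `sentiment_label`, and the constant names are hypothetical helpers of our own.

```python
from functools import lru_cache

# Cutoffs used in this analysis; the VADER README suggests +/-0.05 as typical.
POSITIVE_CUTOFF = 0.5
NEGATIVE_CUTOFF = -0.5

@lru_cache(maxsize=1)
def _analyzer():
    # Requires the third-party vaderSentiment package (pip install vaderSentiment).
    # Imported lazily so the labeling logic below works without it.
    from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer
    return SentimentIntensityAnalyzer()

def compound_score(text):
    """VADER compound score in [-1, 1] for one review text."""
    return _analyzer().polarity_scores(text)["compound"]

def sentiment_label(compound):
    """Bucket a compound score using the +/-0.5 cutoffs."""
    if compound > POSITIVE_CUTOFF:
        return "positive"
    if compound < NEGATIVE_CUTOFF:
        return "negative"
    return "neutral"

# Labeling precomputed toy scores (no VADER call needed here).
for score in (0.82, 0.15, -0.03, -0.71):
    print(score, sentiment_label(score))
```

Separating scoring from labeling makes it easy to rerun the bucketing with different cutoffs without rescoring millions of reviews.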

We can see that most reviews are written with positive language, which is consistent with most reviews being rated 5 stars. When we look at how the sentiment score relates to the overall star rating, the two are positively correlated. However, 1- and 2-star reviews are not written in extremely negative language: their average compound sentiment scores range from just below zero to about 0.15, which falls within the neutral range defined above. Because there are so few strongly negative reviews, it is possible that Amazon removed these types of reviews, or that consumers innately write more positively than they really feel, perhaps because their reviews are public.
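
The text reports that star ratings and compound scores are correlated without naming a statistic. A minimal sketch, assuming Pearson's r over (stars, compound) pairs, plus the per-star averages behind the trend; because star ratings are ordinal, a rank correlation such as Spearman's would be a reasonable alternative. `mean_by_star` and `pearson` are hypothetical helpers, and the pairs are toy values, not results from the dataset.

```python
from collections import defaultdict
from math import sqrt

def mean_by_star(pairs):
    """Average compound score for each star rating.

    `pairs` is an iterable of (stars, compound) tuples.
    """
    totals = defaultdict(lambda: [0.0, 0])  # stars -> [sum, count]
    for stars, compound in pairs:
        totals[stars][0] += compound
        totals[stars][1] += 1
    return {s: total / n for s, (total, n) in sorted(totals.items())}

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Toy (stars, compound) pairs for illustration only.
pairs = [(1, -0.2), (1, 0.1), (2, 0.15), (3, 0.4), (4, 0.7), (5, 0.9), (5, 0.8)]
print(mean_by_star(pairs))
print(round(pearson([s for s, _ in pairs], [c for _, c in pairs]), 3))
```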

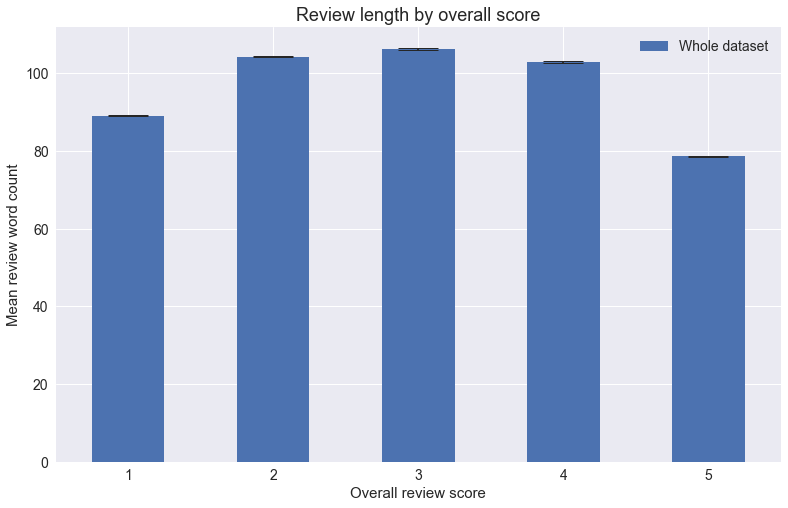

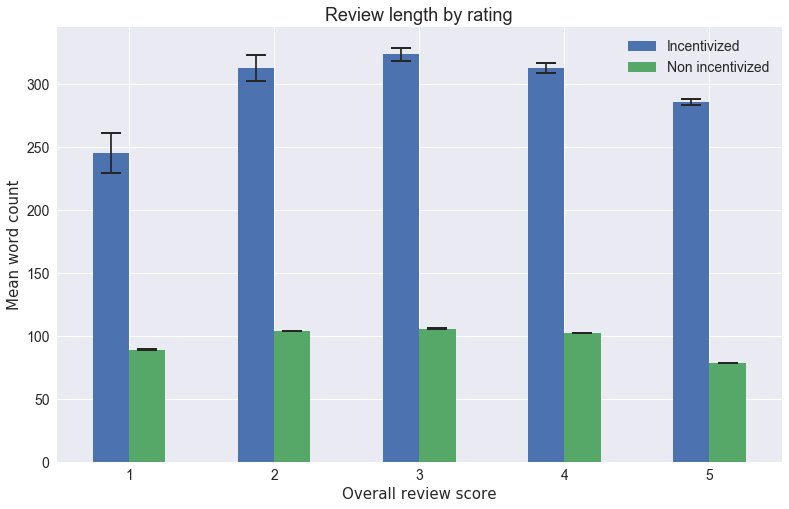

Finally, we looked at review length as an indicator of how strongly someone felt about a product. Interestingly, 5-star reviews were on average the shortest, followed by 1-star reviews, while 2-, 3-, and 4-star reviews were the longest. We think this is because when a product earns 5 stars, there is not much for the consumer to say beyond the fact that it works as intended. With in-between ratings, the product was neither the worst nor the best, so the consumer will usually explain why, and this may require a longer review.
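
The text does not say whether length was measured in words or characters. A minimal sketch assuming whitespace-delimited word counts over the dataset's `reviewText` field, grouped by the `overall` star rating; `word_count` and `mean_length_by_star` are hypothetical helpers, and the records are toy examples.

```python
from collections import defaultdict
from statistics import mean

def word_count(text):
    """Review length as a whitespace-delimited word count (an assumption;
    a character count would slot in the same way)."""
    return len(text.split())

def mean_length_by_star(reviews):
    """Mean word count of reviewText for each overall star rating."""
    lengths = defaultdict(list)
    for r in reviews:
        lengths[int(r["overall"])].append(word_count(r["reviewText"]))
    return {stars: mean(ls) for stars, ls in sorted(lengths.items())}

# Toy records for illustration only.
reviews = [
    {"overall": 5.0, "reviewText": "Works as intended."},
    {"overall": 5.0, "reviewText": "Great."},
    {"overall": 3.0, "reviewText": "Decent sound, but the battery drains fast and the case feels cheap."},
    {"overall": 1.0, "reviewText": "Broke after two days."},
]
print(mean_length_by_star(reviews))
```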

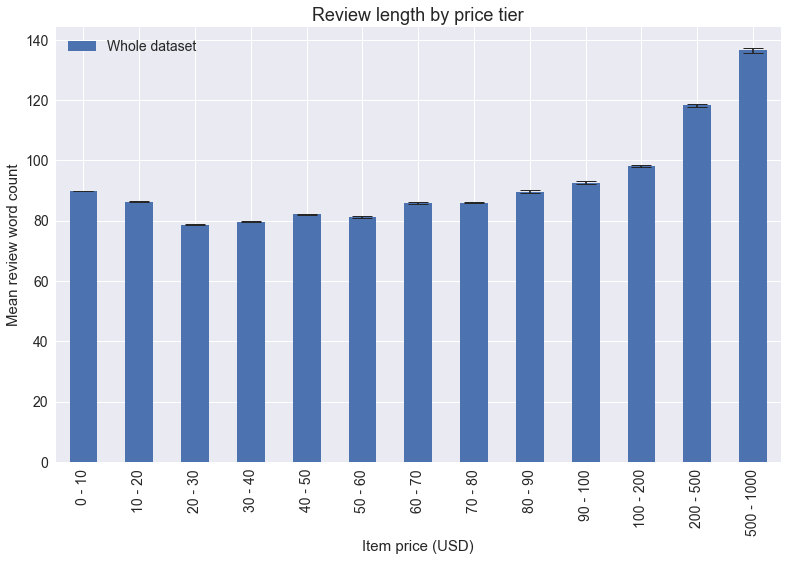

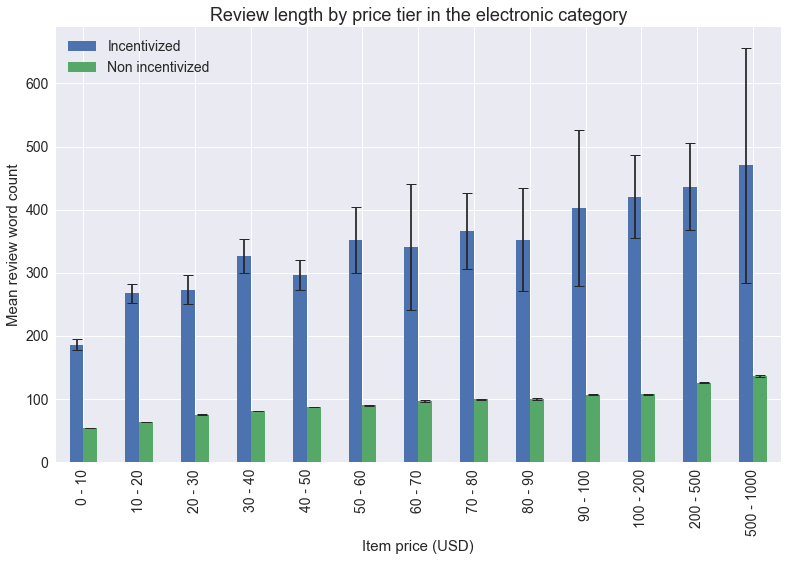

Another interesting trend we saw was that more expensive products had longer reviews. Perhaps people feel more inclined to explain and justify their purchases when the price is high.
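
A minimal sketch of how the price comparison could be computed, assuming reviews are joined to the product metadata on `asin` and that each product's `price` is available as a float (products without a listed price are skipped). The bucket edges in `BUCKETS` are arbitrary illustrative choices, not the ones behind the plot, and `bucket_for` and `mean_length_by_price` are hypothetical helpers.

```python
from collections import defaultdict
from statistics import mean

# Illustrative price bucket edges in dollars: [low, high).
BUCKETS = [(0, 10), (10, 50), (50, 200), (200, float("inf"))]

def bucket_for(price):
    """Return the (low, high) bucket containing price, or None."""
    for low, high in BUCKETS:
        if low <= price < high:
            return (low, high)
    return None  # e.g. negative prices fall outside every bucket

def mean_length_by_price(reviews, prices):
    """Mean review word count per price bucket.

    `prices` maps asin -> price from the product metadata; reviews whose
    product has no listed price are skipped.
    """
    lengths = defaultdict(list)
    for r in reviews:
        price = prices.get(r["asin"])
        if price is None:
            continue
        bucket = bucket_for(price)
        if bucket is not None:
            lengths[bucket].append(len(r["reviewText"].split()))
    return {b: mean(ls) for b, ls in sorted(lengths.items())}

# Toy metadata and reviews for illustration only.
prices = {"A1": 5.99, "B2": 120.0}
reviews = [
    {"asin": "A1", "reviewText": "Cheap and fine."},
    {"asin": "B2", "reviewText": "Pricey, but the build quality and warranty justify it for daily use."},
    {"asin": "C3", "reviewText": "No price listed for this one."},
]
print(mean_length_by_price(reviews, prices))
```

A rising trend across buckets shows an association only; it does not by itself confirm why buyers of expensive products write more.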

Here we delve a bit deeper into the dataset to see whether incentivized reviews differ from non-incentivized reviews. We first separated the reviews into an incentivized set and a non-incentivized set.
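
The text does not spell out how incentivized reviews were identified. One plausible approach, sketched here purely as an assumption, is to flag reviews whose text contains a disclosure phrase such as "in exchange for an honest review". The patterns in `DISCLOSURE_PATTERNS` are hypothetical placeholders, not the criteria used in this analysis, and `is_incentivized` and `split_reviews` are hypothetical helpers.

```python
import re

# Hypothetical disclosure phrases; placeholders, not the analysis's criteria.
DISCLOSURE_PATTERNS = [
    r"\bin exchange for (?:an|my) (?:honest|unbiased)",
    r"\breceived (?:this|the) (?:product|item) (?:for free|at a discount|at a discounted price)",
    r"\b(?:free|discounted) (?:product|sample) (?:for|in exchange for) (?:an|my) (?:honest )?review",
]
DISCLOSURE_RE = re.compile("|".join(DISCLOSURE_PATTERNS), re.IGNORECASE)

def is_incentivized(text):
    """True if the review text contains one of the disclosure phrases."""
    return DISCLOSURE_RE.search(text) is not None

def split_reviews(reviews):
    """Split reviews into (incentivized, non_incentivized) lists."""
    incentivized, non_incentivized = [], []
    for r in reviews:
        target = incentivized if is_incentivized(r["reviewText"]) else non_incentivized
        target.append(r)
    return incentivized, non_incentivized

# Toy reviews for illustration only.
samples = [
    {"reviewText": "I received this product at a discount in exchange for my honest review. Works well."},
    {"reviewText": "Bought it with my own money; works great."},
    {"reviewText": "I received the item for free. Love it."},
]
inc, non = split_reviews(samples)
print(len(inc), len(non))
```

Keyword matching misses reviewers who do not disclose and can occasionally flag unrelated text, so any pattern list is worth spot-checking against real reviews.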

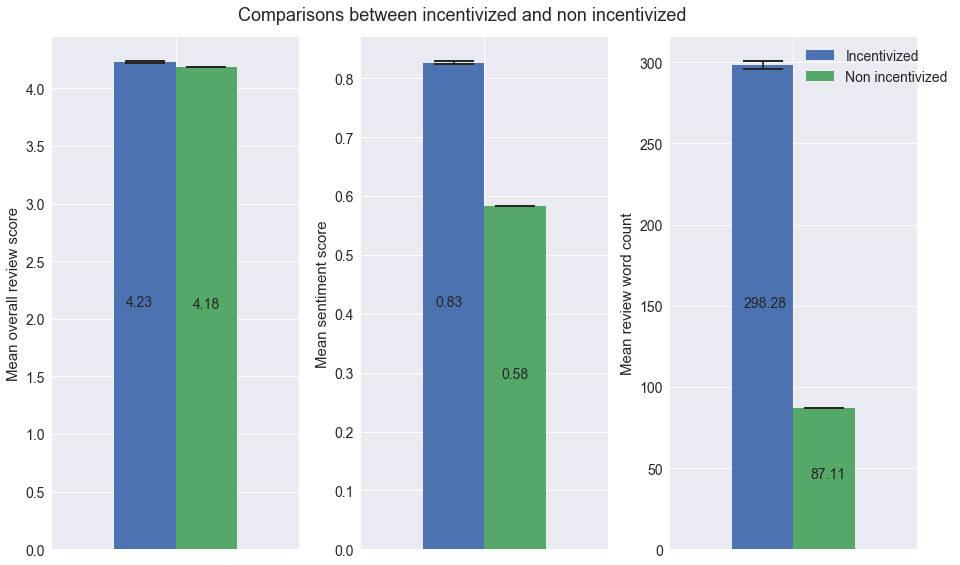

As we explored the data, we compared the average overall review score, positive sentiment, and review length between the two groups. At first glance, incentivized reviews have higher overall ratings, more positive sentiment, and longer text. The overall rating is only slightly higher for incentivized reviews than for non-incentivized ones; however, the difference may be muted because, as we saw in the whole dataset above, products generally receive high ratings (4 or 5 stars). If ratings were more evenly distributed, the difference might have been more obvious.
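
A minimal sketch of this three-way comparison, assuming each review already carries a precomputed VADER `compound` score alongside the dataset's `overall` and `reviewText` fields, and that length is a word count. `group_summary` is a hypothetical helper, and the records are toy examples, not real data.

```python
from statistics import mean

def group_summary(reviews):
    """Mean star rating, mean compound score, and mean word count for a group.

    Assumes each review dict has "overall", a precomputed VADER "compound"
    score, and "reviewText".
    """
    return {
        "rating": mean(r["overall"] for r in reviews),
        "compound": mean(r["compound"] for r in reviews),
        "length": mean(len(r["reviewText"].split()) for r in reviews),
    }

# Toy groups for illustration only.
incentivized = [
    {"overall": 5.0, "compound": 0.9, "reviewText": "Solid charger, fast and reliable so far."},
    {"overall": 4.0, "compound": 0.7, "reviewText": "Good value overall, though the cable is short."},
]
non_incentivized = [
    {"overall": 4.0, "compound": 0.4, "reviewText": "Works fine."},
    {"overall": 2.0, "compound": -0.3, "reviewText": "Stopped charging after a week."},
]

for name, group in (("incentivized", incentivized), ("non-incentivized", non_incentivized)):
    print(name, group_summary(group))
```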

What's even more interesting is the sentiment and length of the review text. Incentivized reviews appear to be written more positively and are generally much longer than non-incentivized reviews. Didn't the people writing incentivized reviews say that their reviews were "honest and unbiased"? Perhaps reviewers were unknowingly writing more positive and longer reviews because they received the product for free, without realizing they were biased. Or, on the other hand, because they received the product for free, they were being as positive and thorough as possible so that they could receive more free products in the future. We don't know the motives of every incentivized reviewer; however, having explored the data a little deeper, we can now say:

No, incentivized reviews are NOT unbiased.

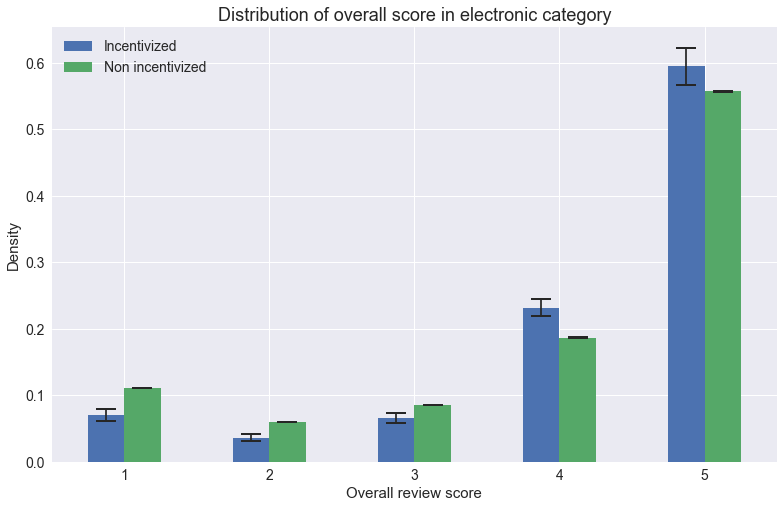

So how exactly are the overall review scores distributed? The plot above shows that incentivized reviews include fewer 5-star ratings than non-incentivized reviews. However, incentivized reviewers give fewer 1- and 2-star ratings than non-incentivized reviewers, and many more 4-star ratings.
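
Because the two groups can differ greatly in size, raw counts are hard to compare; a minimal sketch, assuming the comparison is made on the share of each group's reviews at each star level. `star_shares` is a hypothetical helper, and the rating lists are made-up toy values.

```python
from collections import Counter

def star_shares(ratings):
    """Fraction of reviews at each star level (1-5), so groups of very
    different sizes can be compared directly."""
    counts = Counter(int(r) for r in ratings)
    total = sum(counts.values())
    return {stars: counts[stars] / total for stars in range(1, 6)}

# Toy ratings for illustration only.
incentivized = [5, 4, 4, 5, 3, 4, 5, 5]
non_incentivized = [5, 5, 5, 1, 2, 5, 4, 5, 1, 5]

inc = star_shares(incentivized)
non = star_shares(non_incentivized)
for stars in range(5, 0, -1):
    print(f"{stars}-star: {inc[stars]:.1%} vs {non[stars]:.1%}")
```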

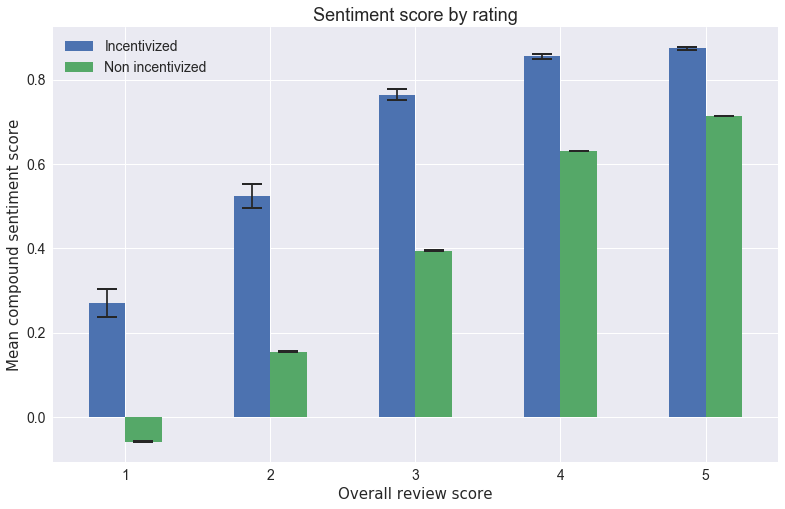

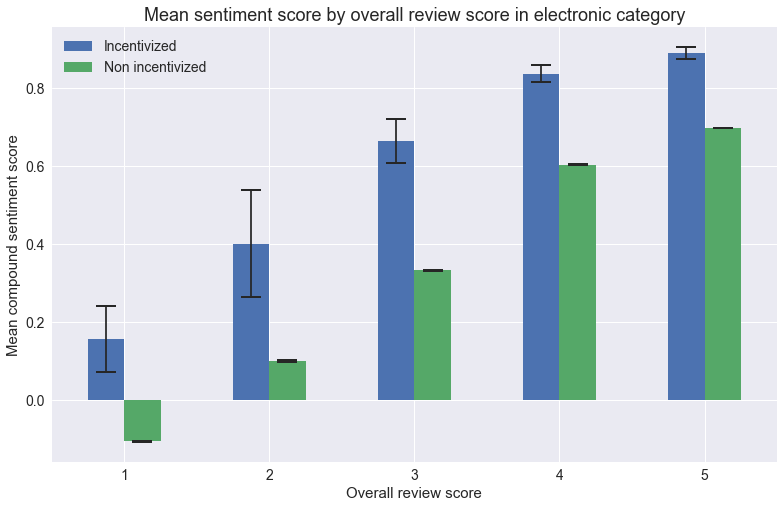

But how positively (or negatively) do incentivized reviewers write their reviews based on the overall review score? Let’s take a look.

Here, we can see something very consistent across all review scores given by incentivized reviewers: they don't write negatively, even about 1-star products. Based on the sentiment scores, incentivized reviewers who give low star ratings still use neutral to more positive language than non-incentivized reviewers. One possible explanation is that, having received the product for free (or at a discounted price), they avoid negative wording in the review text. In addition, incentivized reviews are longer on average than non-incentivized reviews at every review score. Let's zoom in on one category in more detail. The category of choice is Electronics:

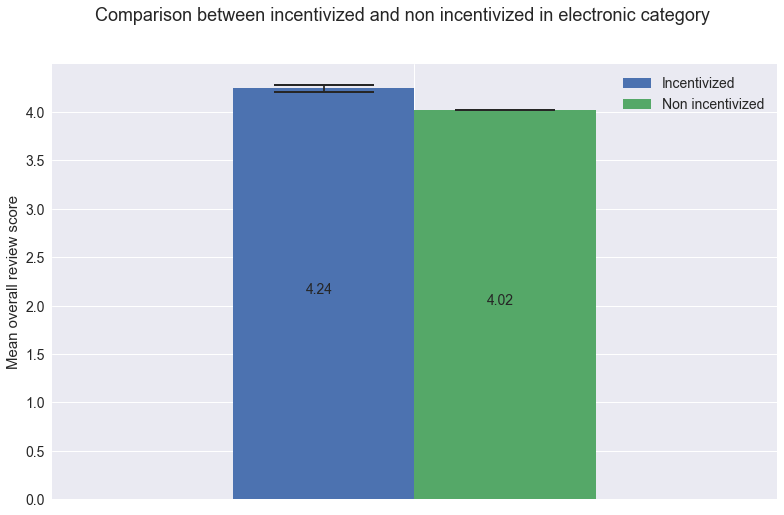

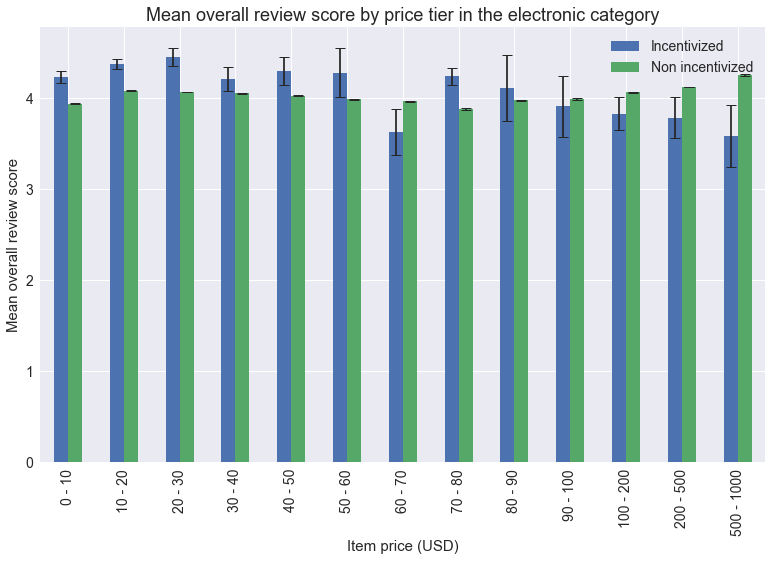

In the Electronics category, the difference in average overall rating between the incentivized and non-incentivized groups is more obvious: 4.24 vs. 4.02, respectively. This statistically significant difference indicates that when reviewers receive an electronics product for free, they tend to rate it higher than those who purchase it at full price. The distribution of star ratings also differs between the two groups: reviewers who receive electronics products for free give more 4- and 5-star ratings and fewer 1-, 2-, and 3-star ratings than reviewers who purchase the product at full price.
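
The text does not say which test established significance. A minimal sketch, assuming a Welch two-sample t-test on the star ratings: with large samples the t statistic is close to normally distributed, so the two-sided p-value below uses `statistics.NormalDist` rather than the exact t distribution. `scipy.stats.ttest_ind(a, b, equal_var=False)` computes the exact version, and because star ratings are ordinal, `scipy.stats.mannwhitneyu` is a reasonable alternative. `welch_z_test` is a hypothetical helper, and the rating lists are made up; they are not the Electronics data.

```python
from math import sqrt
from statistics import NormalDist, mean, variance

def welch_z_test(a, b):
    """Welch's t statistic for mean(a) - mean(b), with a two-sided p-value
    from the normal approximation (reasonable only for large samples)."""
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    t = (mean(a) - mean(b)) / se
    p = 2 * NormalDist().cdf(-abs(t))
    return t, p

# Toy star ratings for illustration only.
a = [5] * 60 + [4] * 30 + [3] * 10              # e.g. incentivized
b = [5] * 45 + [4] * 30 + [3] * 15 + [1] * 10   # e.g. non-incentivized

t, p = welch_z_test(a, b)
print(f"t = {t:.2f}, p = {p:.4f}")
```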

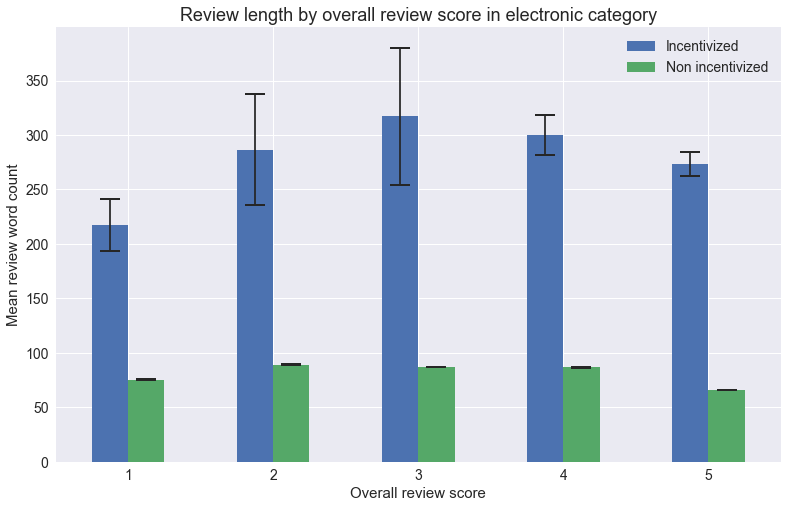

With the mean sentiment score and mean review length for each overall review score in the Electronics category, we see the same trend: sentiment is more positive at every review score, even for 1-star ratings, and incentivized reviews are longer on average than non-incentivized ones at every score.
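
A minimal sketch of these per-score comparisons restricted to one category, assuming each review dict already carries a `category`, an `incentivized` flag, and a precomputed VADER `compound` score in addition to `overall` and `reviewText`; `cell_means` and those added field names are hypothetical. Each (incentivized, stars) cell holds the mean compound score and the mean word count.

```python
from collections import defaultdict
from statistics import mean

def cell_means(reviews, category=None):
    """Mean compound score and mean word count per (incentivized, stars) cell.

    Pass category="Electronics" to restrict to one category.
    """
    cells = defaultdict(list)
    for r in reviews:
        if category is not None and r["category"] != category:
            continue
        cells[(r["incentivized"], int(r["overall"]))].append(r)
    return {
        key: (mean(r["compound"] for r in rs),
              mean(len(r["reviewText"].split()) for r in rs))
        for key, rs in sorted(cells.items())
    }

# Toy records for illustration only.
reviews = [
    {"category": "Electronics", "incentivized": True, "overall": 1.0, "compound": 0.3,
     "reviewText": "Not for me, but the packaging was nice."},
    {"category": "Electronics", "incentivized": False, "overall": 1.0, "compound": -0.6,
     "reviewText": "Terrible. Returned it."},
    {"category": "Electronics", "incentivized": True, "overall": 5.0, "compound": 0.9,
     "reviewText": "Excellent build quality and great battery life."},
    {"category": "Electronics", "incentivized": False, "overall": 5.0, "compound": 0.8,
     "reviewText": "Love it."},
    {"category": "Books", "incentivized": True, "overall": 3.0, "compound": 0.5,
     "reviewText": "An okay read."},
]
for key, (compound, length) in cell_means(reviews, category="Electronics").items():
    print(key, round(compound, 2), length)
```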

In conclusion, we found several distinctive features of incentivized reviews. In general, incentivized reviews were written more positively, were longer, and were rated higher. This was particularly evident in the Electronics category, where the average overall score was 4.24 from incentivized reviewers and 4.02 from non-incentivized reviewers. In the future, more work could examine which words or adjectives appear more frequently in incentivized reviews, and whether the trend of higher ratings for incentivized reviews holds within individual reviewers.